Introducing SELF-DISCOVER: An Efficient Machine Learning Framework for Models to Self-Discover a Reasoning Structure

This AI Paper from USC and Google Introduces SELF-DISCOVER: An Efficient Machine Learning Framework for Models to Self-Discover a Reasoning Structure for Any Task

Main Ideas:

The development of Large Language Models (LLMs) has advanced the ability of machines to generate text, follow instructions, and solve problems in ways that resemble human reasoning.

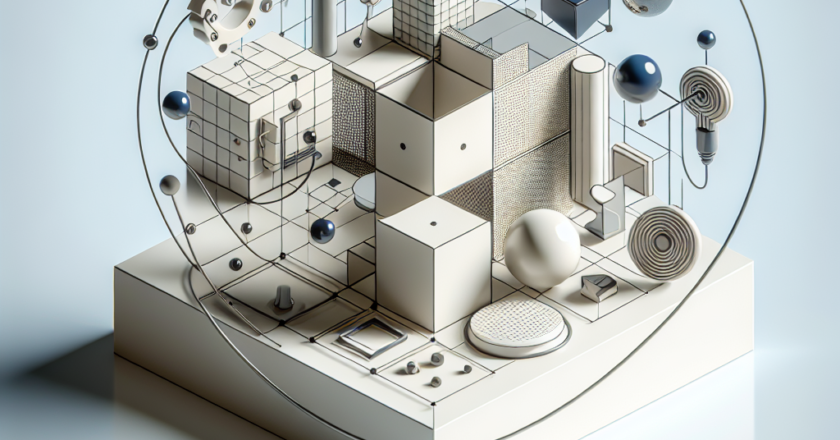

Researchers from the University of Southern California (USC) and Google have introduced a machine learning framework called SELF-DISCOVER.

SELF-DISCOVER enables models to self-discover a reasoning structure for any given task.

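The framework works through prompting alone, with no gradient updates or fine-tuning. In a first stage, run once per task, the model selects relevant atomic reasoning modules (for example, critical thinking or step-by-step thinking), adapts them to the task, and implements them as an explicit, structured reasoning plan. In a second stage, the model follows that structure to solve each individual instance of the task.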
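To make the two stages concrete, here is a minimal Python sketch. It assumes a generic `llm` callable (hypothetical) that maps a prompt string to a completion string. The function names, the short module list, and the prompt wording are illustrative paraphrases, not the authors' actual prompts or code.

```python
from typing import Callable, List

# Hypothetical interface: any function mapping a prompt string to a completion string.
LLM = Callable[[str], str]

# A small illustrative subset of seed reasoning modules (paraphrased; the paper uses a larger set).
REASONING_MODULES: List[str] = [
    "How can I break down this problem into smaller, more manageable parts?",
    "Critical thinking: analyze the problem from different perspectives.",
    "Let's think step by step.",
    "How can I simplify the problem so that it is easier to solve?",
]


def self_discover_structure(llm: LLM, task_examples: List[str],
                            modules: List[str] = REASONING_MODULES) -> str:
    """Stage 1: run once per task to produce a reusable reasoning structure."""
    examples = "\n".join(task_examples)
    module_list = "\n".join(f"- {m}" for m in modules)

    # SELECT: ask the model which seed modules are relevant to this task.
    selected = llm(
        "Select the reasoning modules that are useful for solving these tasks.\n"
        f"Modules:\n{module_list}\nTask examples:\n{examples}"
    )
    # ADAPT: rephrase the selected modules so they are specific to the task.
    adapted = llm(
        "Rephrase the selected modules to be specific to the task.\n"
        f"Selected modules:\n{selected}\nTask examples:\n{examples}"
    )
    # IMPLEMENT: turn the adapted modules into a step-by-step plan with JSON-style keys.
    structure = llm(
        "Operationalize the adapted modules into a step-by-step reasoning plan "
        f"in JSON format.\nAdapted modules:\n{adapted}\nTask examples:\n{examples}"
    )
    return structure


def solve_with_structure(llm: LLM, structure: str, instance: str) -> str:
    """Stage 2: reuse the same discovered structure for every instance of the task."""
    return llm(
        "Follow the reasoning structure below step by step, filling in each value, "
        f"then give the final answer.\nStructure:\n{structure}\nTask:\n{instance}"
    )
```

In this sketch the structure is discovered once per task rather than once per question, so the extra cost over direct prompting is a fixed three calls per task; that is the intuition behind the framework being described as efficient. Any real model client can be wrapped as the `llm` function to try it.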

By leveraging SELF-DISCOVER, models can exhibit higher performance on a range of tasks while requiring minimal fine-t...