Summary:

Main Points:

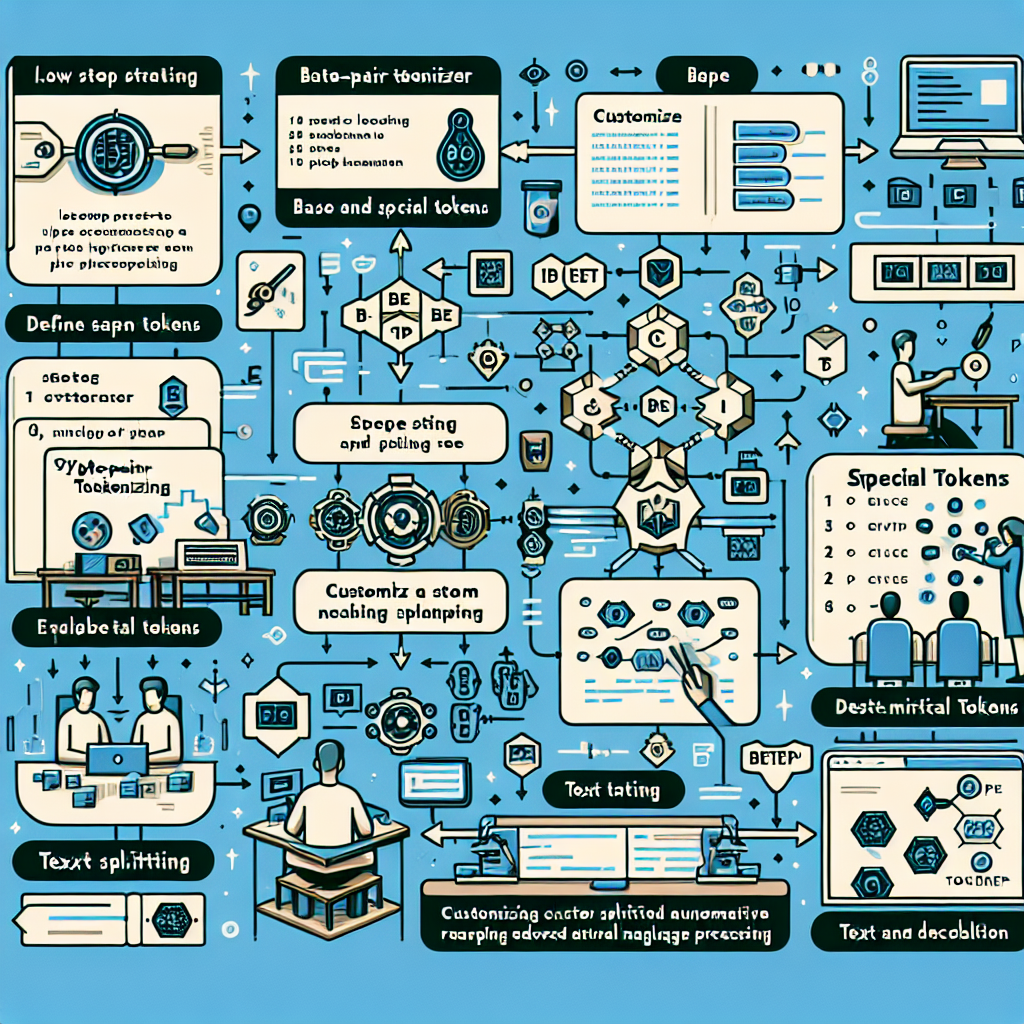

– The article walks step by step through building a custom tokenizer in Python with OpenAI's tiktoken library.

– The process covers loading a pre-trained tokenizer model, defining the base and special tokens, and setting up a regular expression that splits text into pieces before encoding.

– The tokenizer is then tested by encoding a sample text into token IDs and decoding it back.

Author’s Take:

Building a custom tokenizer with tiktoken gives Python developers direct control over how text is split into tokens for Natural Language Processing tasks. By following the guide, users can set up a tokenizer whose vocabulary, special tokens, and splitting rules match the needs of their own applications.
