Key Points:

– Quantization lowers the compute and memory cost of deep learning models, making them cheaper and faster to run.

– Large language models demand substantial memory and processing power, so quantization matters most there: it reduces memory usage and speeds up inference.

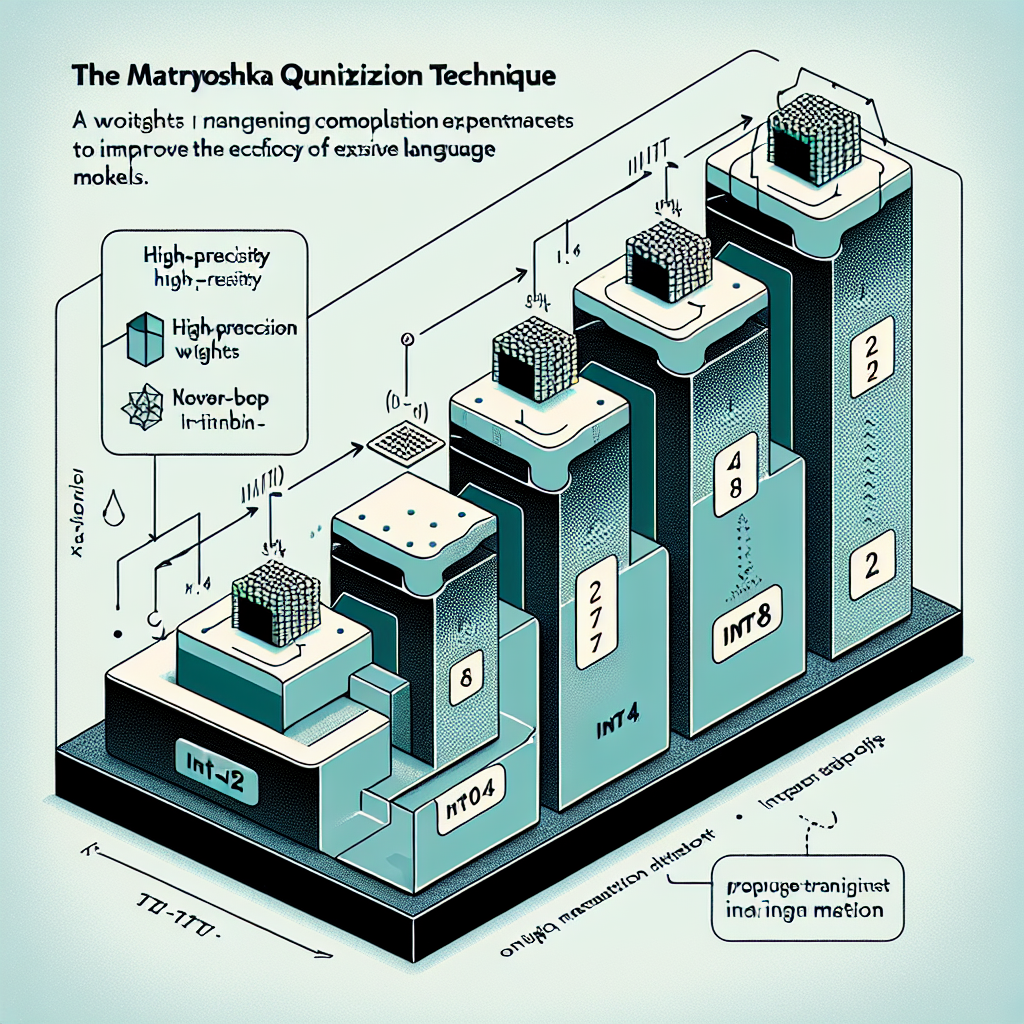

– The technique converts high-precision weights (typically 16- or 32-bit floating point) to lower-bit formats such as int8, int4, or int2, cutting storage requirements.

– Google DeepMind researchers have introduced Matryoshka quantization, which aims to optimize a model for multiple precisions at once without compromising accuracy.
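To make the weight-conversion point concrete, here is a minimal sketch of symmetric uniform quantization in plain Python. It is not the Matryoshka method itself, only a generic illustration of mapping float weights to int8, int4, and int2 codes. The function names (`quantize`, `dequantize`, `storage_bytes`) and the sample weights are hypothetical, chosen for this example.

```python
def quantize(weights, bits):
    """Symmetric uniform quantization of floats to signed `bits`-bit integer codes.

    Illustrative assumption: one scale per tensor, derived from the largest
    absolute weight. Real systems often use per-channel or per-group scales.
    """
    qmax = 2 ** (bits - 1) - 1  # 127 for int8, 7 for int4, 1 for int2
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest code and clamp to the signed range [-qmax - 1, qmax].
    codes = [max(-qmax - 1, min(qmax, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate float weights."""
    return [c * scale for c in codes]


def storage_bytes(num_weights, bits):
    """Bytes needed for the codes alone (scales and metadata are ignored)."""
    return num_weights * bits / 8


# Hypothetical sample weights, not taken from any real model.
weights = [0.82, -0.41, 0.05, -1.27, 0.63, 0.0, 1.10, -0.96]

for bits in (8, 4, 2):
    codes, scale = quantize(weights, bits)
    restored = dequantize(codes, scale)
    max_err = max(abs(w - r) for w, r in zip(weights, restored))
    print(f"int{bits}: codes={codes} "
          f"bytes={storage_bytes(len(weights), bits)} max_error={max_err:.3f}")
```

Running the loop shows the trade-off the bullets describe: each step down in bit width halves the storage for the codes, while the worst-case reconstruction error grows. That growing error is why low-bit formats such as int2 are hard to use without accuracy loss.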

Author’s Take:

Google DeepMind’s Matryoshka quantization is a promising approach to improving deep learning efficiency by optimizing multi-precision models. It reflects the AI community’s continued effort to shrink and speed up models without sacrificing accuracy, and it may inform future work on model optimization.
